AI in GxP

What's working, what's risky, and what regulators actually say

Every pharmaceutical quality team is asking the same question right now: how do we actually use AI without risking compliance? The answer is not in vendor promises or regulatory fear – it is in understanding what AI can and cannot do in GxP environments.

+ 32 key sources for further research

Note

This article provides educational context on AI in GxP environments and does not constitute regulatory or legal advice. AI technology and regulatory guidance evolve rapidly – verify current requirements with your regulatory body and legal counsel before implementation.

What does AI actually mean in pharmaceutical quality?

Not everyone means the same thing when they say "AI in GxP." The technology has evolved through distinct phases: rule-based automation → machine learning → generative AI → agentic AI.

Machine learning has been in use for years, in pharma and elsewhere – elevator systems that learn usage patterns, predictive models based on historical data. Once trained and locked, these models behave deterministically: input A always produces output B.

Generative AI is fundamentally different. It is probabilistic – input A might produce output B, or something completely unexpected. This creates a validation challenge that traditional Computerized System Validation (CSV) was not designed to handle.

Key terminology for AI compliance

Some terms to keep in mind as we move forward:

- Static vs. adapting models: Static models are "locked" after training and do not change during operation. Adapting models continue learning from new data. This distinction is critical. EU Draft Annex 22 forbids adapting models in critical GMP applications because continuous learning creates uncontrolled changes that violate change control requirements.

- Deterministic: Input A always produces Output B.

- Probabilistic: Input A usually produces Output B, but can produce something else.

- Explainability: Can you trace how the model reached a specific decision for an audit? Black-box models create documentation challenges for GxP compliance because root cause analysis becomes virtually impossible.

- Hallucinations: Factually false outputs confidently presented as truth. Research published by OpenAI argues that hallucinations follow from the statistical way large language models are trained and evaluated – treat them as an inherent characteristic of how these systems work, not as a one-off bug.

- Drift: Model accuracy degrades as real-world data shifts from training data. This requires continuous performance monitoring with defined re-validation triggers.
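The deterministic/probabilistic distinction above can be illustrated with a small sketch. This is a toy, not a real model: `rule_based_check`, its 8.0 °C limit, `sampled_answer`, and the made-up probabilities are all hypothetical names and values chosen for illustration.

```python
import random


def rule_based_check(temperature_c: float) -> str:
    """Deterministic rule: the same input always gives the same output."""
    # The 8.0 degree limit is a hypothetical threshold, for illustration only.
    return "ALARM" if temperature_c > 8.0 else "OK"


def sampled_answer(prompt: str, rng: random.Random) -> str:
    """Toy stand-in for a generative model (not a real LLM call).

    The prompt is ignored; the point is only that the output is sampled.
    """
    candidates = ["Output B", "Output B, reworded", "Unexpected output"]
    weights = [0.80, 0.15, 0.05]  # made-up probabilities
    return rng.choices(candidates, weights=weights, k=1)[0]


# Deterministic: 100 calls with the same input yield one distinct output.
deterministic_outputs = {rule_based_check(9.1) for _ in range(100)}

# Probabilistic: repeated calls with the same prompt can differ.
rng = random.Random(0)  # seeded only so the demo is repeatable
probabilistic_outputs = {sampled_answer("Input A", rng) for _ in range(1000)}

print(deterministic_outputs)           # a single distinct value
print(len(probabilistic_outputs) > 1)  # more than one distinct value
```

A pass/fail test with one expected output suits the first function but not the second, which is why probabilistic systems need statistical acceptance criteria.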

The AI x GxP risk landscape

The risk landscape of using AI in GxP can be summed up as:

1. Input risks: Data integrity and governance

- Violations of ALCOA+

- Intellectual property issues

- Black-box bias

2. Process risks: AI model reliability, validation, and monitoring

- Non-determinism

- No explainability

- Degradation/drift

3. Environment risks: Supplier opacity, new cybersecurity threats

- Vendor opacity

- Prompt injection

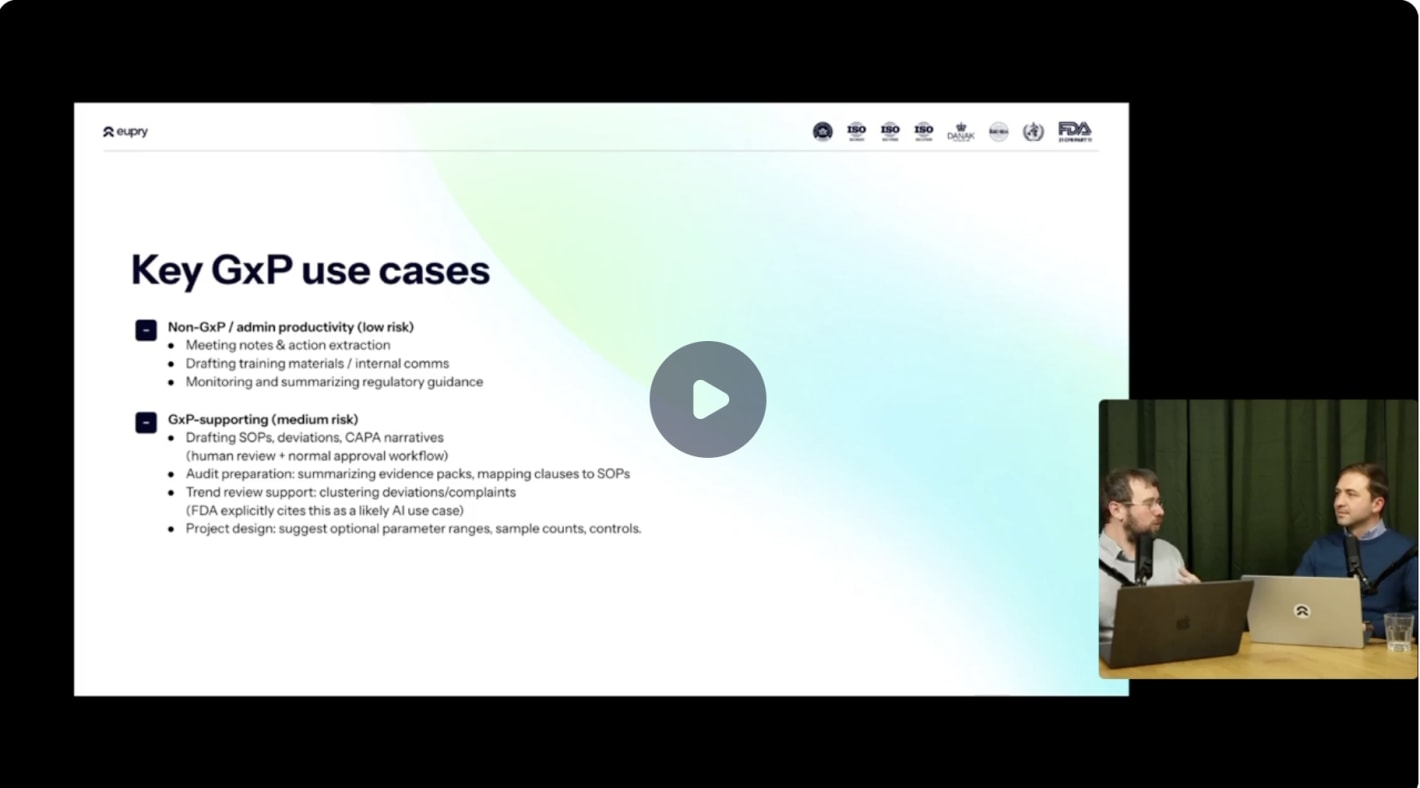

Where is AI already working in pharma and other GxP industries?

Despite risks, pharmaceutical companies are finding real value. The key is matching AI capabilities to appropriate use cases based on patient safety impact.

Low-risk applications (implement now)

Meeting summaries and action extraction: Convert quality reviews, CAPA discussions, and management meetings into structured action lists without manual note-taking.

Training material drafting: Generate first drafts of SOPs and training presentations – always with subject matter expert review and approval before use.

Regulatory monitoring: Track FDA guidance updates, EMA announcements, and industry standards changes across multiple sources simultaneously.

Medium-risk applications (require human review)

SOP and deviation drafting: AI generates initial text based on templates and historical documents. Quality teams review, revise, and approve through normal workflows – maintaining change control while reducing drafting time by up to 70%.

Audit preparation: Summarizing evidence packs, mapping regulatory clauses to procedures, and identifying documentation gaps. The AI organizes information; auditors and quality managers interpret it and make final decisions.

Trend analysis: Clustering similar deviations or complaints to identify patterns. The system flags potential trends – quality teams investigate root causes and determine corrective actions.

FDA perspective: The FDA's 2023 discussion paper "Artificial Intelligence in Drug Manufacturing" explicitly identifies trend monitoring as an appropriate use case, noting that AI can examine complaint and deviation reports to identify patterns and prioritize areas for continual improvement – with proper oversight and human decision-making.

High-risk applications (require rigorous validation)

Advanced process control: Real-time adjustments to manufacturing parameters based on sensor data. Requires extensive validation, performance monitoring, and deviation procedures.

Vision-based quality control: Automated visual inspection of tablets, vials, or packaging. False negatives could release a defective product, requiring comprehensive validation and continuous monitoring.

Real-time clinical trial adaptation: Adjusting dosing regimens based on emerging data. Direct patient safety implications require regulatory oversight and extensive human review.

What are regulators saying about AI in GxP?

In 2026, the regulatory landscape is evolving rapidly, with different regional approaches.

EU approach: Restrictive but clear

Draft Annex 22 (expected mid-2026):

- Explicitly forbids "continuous learning" (dynamic) models in critical GMP applications

- Requires AI models to be "locked" (static) and deterministic for critical quality decisions

- Mandates continuous oversight and performance monitoring throughout the model lifecycle

Draft Annex 11 (expected mid-2026):

- Enhanced data governance and audit trail requirements, capturing AI-assisted decisions

- Strengthened system security, including penetration testing and multi-factor authentication

- Explicit supplier oversight requirements for AI-as-a-service tools

EU AI Act (in force since August 2024, obligations phasing in from 2025):

- Categorizes systems by risk (minimal to unacceptable)

- High-risk in pharma: AI as part of medical devices or IVDs, emergency triage systems, HR systems managing quality staff

- Both "deployers" (users) and "providers" (developers) have compliance obligations

EMA Reflection Paper (2024):

- Risk-based approach based on patient safety impact

- Human oversight mandatory for reviewing AI-generated text

- High-risk models must be prospectively tested with new data (not just historical training data)


US approach: Flexible but principles-based

FDA Computer Software Assurance (September 2025):

- Shifted from traditional CSV to risk-based CSA

- Assessment based on intended use and patient safety impact

- Critical thinking over documentation volume

FDA AI Considerations (draft January 2025):

- Frames AI as most relevant to: process design/control, monitoring/maintenance, trend analysis

- Explicitly states open questions remain around validating self-learning models

- Provides examples of appropriate use cases with required controls

FDA Framework for AI Credibility:

A structured 7-step process: Define question of interest → define context of use → assess risk → develop credibility plan → execute → document → determine adequacy.

This maps reasonably well to existing validation approaches while acknowledging AI-specific challenges like probabilistic outputs and continuous learning.


ISPE GAMP AI guidance

The GAMP AI Guide includes the following concepts:

- Quality by design for AI: Building quality into model development, not testing it afterward

- Fit-for-purpose data: Training data must represent actual use and meet data integrity standards

- Knowledge management: Documenting model decisions, limitations, and acceptable use

- Data and model governance: Change control for both training data and model versions

- Model performance monitoring: Continuous validation that accuracy remains acceptable

Critical distinction: The regulated company validates that AI is "built right for this specific GxP use." Infrastructure providers only provide qualified building blocks.

The regulatory consensus about AI use in GxP

Despite different approaches, several consistent themes emerge:

- Human-in-the-loop is mandatory for critical GMP decisions

- Locked (static) models are required for high-risk applications (EU explicit, FDA implied)

- Continuous monitoring is essential – validate once and monitor continuously

- Data governance is paramount – ALCOA+ principles apply to AI training data

9 principles for safe use of AI in GxP


Adopt AI in pharma and other GxP operations without compromising compliance. This guide covers the core principles.

How do you implement AI safely in GxP environments?

The previous sections covered the boundaries of the regulatory landscape. This section covers the practical steps to implement AI within those constraints.

Start with risk-based use case selection

Define intended use specifically:

- What decision or task does the AI support?

- Who will use the output, and how?

- What happens if the AI output is wrong?

- Does this directly impact product quality or patient safety?

Vague statements like "improve quality" make validation impossible.

Match validation rigor to patient safety risk:

- Low risk: Supplier assessment, functional testing, user acceptance

- Medium risk: Add change control, audit trail review, periodic accuracy checks

- High risk: Full validation protocol, prospective testing, continuous monitoring with revalidation triggers
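The tiering above can be captured as a simple lookup so that every use case gets a consistent activity list. This is a minimal sketch: the names `RiskTier` and `validation_activities` are hypothetical, and treating the tiers as cumulative (each tier includes the activities of the tier below) is an assumption read from the word "Add" in the medium tier.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Activity lists taken from the tiers described above.
LOW_ACTIVITIES = ["supplier assessment", "functional testing", "user acceptance"]
MEDIUM_ADDITIONS = ["change control", "audit trail review", "periodic accuracy checks"]
HIGH_ADDITIONS = [
    "full validation protocol",
    "prospective testing",
    "continuous monitoring with revalidation triggers",
]


def validation_activities(tier: RiskTier) -> list[str]:
    """Return the validation activities for a tier.

    Assumption: tiers are cumulative, so a higher tier also includes
    everything required for the tiers below it.
    """
    activities = list(LOW_ACTIVITIES)
    if tier in (RiskTier.MEDIUM, RiskTier.HIGH):
        activities += MEDIUM_ADDITIONS
    if tier is RiskTier.HIGH:
        activities += HIGH_ADDITIONS
    return activities


for tier in RiskTier:
    print(tier.value, "->", validation_activities(tier))
```

The tier itself should still come from a documented risk assessment of the intended use; the lookup only makes the consequence of that decision repeatable.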

Maintain human decision authority

AI as "draft only":

For document generation or investigations:

- AI produces initial output

- Subject matter expert reviews, revises, and approves

- Human signs as author – audit trail captures both AI contribution and human review

AI as "recommendation only":

For trend analysis or optimization:

- AI identifies patterns or suggests actions

- Quality team investigates independently

- Documentation explains the reasoning for accepting or rejecting AI input

Prohibited uses:

AI should never approve batch release, close deviations, override alarm conditions, or make regulatory submissions without human review and approval.

Ensure full traceability

For each AI-assisted decision, capture:

- User, timestamp, exact prompt/input

- Complete output received

- Model version and configuration

- Human decision made based on AI output

This creates reconstructability – the ability to replay any AI-assisted decision during an audit.
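As a rough illustration, the fields listed above could be captured in one immutable record per AI-assisted decision. The class name `AIDecisionRecord`, the helper `new_record`, and the SHA-256 fingerprint are assumptions added for this sketch; a real system would store such records in a validated audit trail.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AIDecisionRecord:
    """One AI-assisted decision, with the fields needed to reconstruct it."""
    user: str
    timestamp_utc: str
    prompt: str          # exact prompt/input
    output: str          # complete output received
    model_version: str
    model_config: dict   # must be JSON-serializable for fingerprint()
    human_decision: str

    def fingerprint(self) -> str:
        """SHA-256 over the record content (an assumption, used here so
        that later changes to a stored record become detectable)."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def new_record(user: str, prompt: str, output: str, model_version: str,
               model_config: dict, human_decision: str) -> AIDecisionRecord:
    """Create a record stamped with the current UTC time."""
    return AIDecisionRecord(
        user=user,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
        prompt=prompt,
        output=output,
        model_version=model_version,
        model_config=model_config,
        human_decision=human_decision,
    )


record = new_record(
    user="qa.reviewer",
    prompt="Summarize deviation DEV-001",  # hypothetical identifier
    output="Draft summary text",
    model_version="example-model-1.0",     # hypothetical version label
    model_config={"temperature": 0.0},
    human_decision="accepted with edits",
)
print(record.fingerprint())
```

Storing the fingerprint alongside the record gives reviewers a quick way to confirm that what they replay during an audit is what was captured at the time.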

Regularly review AI interactions for unusual patterns, potential bias, hallucinations, or drift indicators. Treat AI audit trails like any other GxP system.


Apply adapted validation approaches

For locked (static) AI models:

Modified traditional validation works:

- Supplier assessment, including security and data governance

- Requirements specification for your specific context of use

- Performance qualification with representative data from your environment

- Acceptance criteria (define minimum accuracy, precision, recall)

- Ongoing monitoring to detect drift

For probabilistic AI models:

Traditional pass/fail testing does not work. Instead:

- Define acceptable error rate: "95% accuracy on SOP retrieval" not "100% correct"

- Test with diverse inputs, including edge cases and ambiguous queries

- Establish performance metrics: precision, recall, F1 score

- Set monitoring thresholds that trigger revalidation

- Plan for failure response: how will you detect and respond to hallucinations?
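To make the metrics above concrete, here is a minimal sketch that computes accuracy, precision, recall, and F1 for a binary task and compares them with acceptance criteria. The function names and the sample data are hypothetical, and the thresholds are placeholders, not recommended values.

```python
def classification_metrics(expected: list[bool], predicted: list[bool]) -> dict[str, float]:
    """Compute accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(expected, predicted))
    tp = sum(1 for e, p in pairs if e and p)          # true positives
    fp = sum(1 for e, p in pairs if not e and p)      # false positives
    fn = sum(1 for e, p in pairs if e and not p)      # false negatives
    tn = sum(1 for e, p in pairs if not e and not p)  # true negatives

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def meets_acceptance(metrics: dict[str, float], criteria: dict[str, float]) -> bool:
    """True only if every metric reaches its predefined minimum."""
    return all(metrics[name] >= minimum for name, minimum in criteria.items())


# Hypothetical test set: 8 relevant cases, 2 irrelevant ones.
expected = [True] * 8 + [False] * 2
predicted = [True] * 7 + [False] + [True] + [False]

metrics = classification_metrics(expected, predicted)
print(metrics)
print(meets_acceptance(metrics, {"accuracy": 0.95}))
```

Fixing the criteria before testing, as with any validation protocol, keeps the acceptance decision from being adjusted to fit the results.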

Implement continuous monitoring and change control

Track model performance:

Establish baseline during validation, then monitor:

- Accuracy on representative test cases

- Error rates by query type

- User satisfaction scores

- Frequency of human overrides

Define revalidation triggers:

- Model accuracy drops below a defined threshold (e.g., from a 95% baseline to below 90%)

- Model version or configuration changes

- Training data updates

- Intended use expands to new applications

- Regulatory requirements change
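The triggers above can be checked mechanically by comparing the current state of a model against its validated baseline. The sketch below assumes a hypothetical `ModelState` record and a 0.90 minimum accuracy; regulatory change is passed in as a manual flag because it cannot be detected from model data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelState:
    accuracy: float               # accuracy on representative test cases
    model_version: str            # version and configuration identifier
    training_data_version: str
    intended_uses: frozenset      # approved applications


def revalidation_reasons(baseline: ModelState, current: ModelState,
                         min_accuracy: float = 0.90,
                         regulatory_change: bool = False) -> list[str]:
    """Return every trigger that fired; an empty list means none did."""
    reasons = []
    if current.accuracy < min_accuracy:
        reasons.append("accuracy below threshold")
    if current.model_version != baseline.model_version:
        reasons.append("model version or configuration changed")
    if current.training_data_version != baseline.training_data_version:
        reasons.append("training data updated")
    # Any use not covered by the validated baseline counts as an expansion.
    if not current.intended_uses <= baseline.intended_uses:
        reasons.append("intended use expanded")
    if regulatory_change:
        reasons.append("regulatory requirements changed")
    return reasons


baseline = ModelState(0.95, "1.0", "2024-01", frozenset({"sop_retrieval"}))
current = ModelState(0.89, "1.0", "2024-01",
                     frozenset({"sop_retrieval", "deviation_drafting"}))

print(revalidation_reasons(baseline, current))
```

Returning the list of reasons, not a single yes/no, gives change control a ready-made justification for the revalidation record.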

Protect data and maintain ALCOA+ compliance

Training data governance:

Training data must meet ALCOA+ principles – attributable, legible, contemporaneous, original, and accurate, plus complete, consistent, enduring, and available. Document data sources, cleaning processes, and exclusions.

Operational data protection:

- Use enterprise AI tools with clear data ownership terms

- Verify your data is not used for vendor model training

- Ensure data residency meets regulatory requirements (especially EU personal data)

Intellectual property protection:

- Do not input proprietary formulations or processes into public AI tools

- Implement data classification: what can and cannot be shared with AI systems

- Train staff on appropriate vs inappropriate AI queries
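A data classification rule of this kind can be expressed as a simple gate that runs before anything is sent to an AI tool. The classification levels, tool categories, and the policy table below are hypothetical examples; the actual levels and limits would come from your own data governance policy.

```python
from enum import IntEnum


class DataClass(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2  # e.g. proprietary formulations or processes


# Hypothetical policy: the highest classification each tool type may receive.
MAX_ALLOWED = {
    "public_ai_tool": DataClass.PUBLIC,
    "enterprise_ai_tool": DataClass.INTERNAL,
}


def may_submit(tool: str, data_class: DataClass) -> bool:
    """Allow submission only to known tools, within their classification limit."""
    limit = MAX_ALLOWED.get(tool)
    # Unknown tools are rejected by default.
    return limit is not None and data_class <= limit


print(may_submit("public_ai_tool", DataClass.CONFIDENTIAL))
print(may_submit("enterprise_ai_tool", DataClass.INTERNAL))
```

Rejecting unknown tools by default means a newly adopted tool cannot receive data until someone has explicitly classified it.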


Based on practical pharmaceutical industry experience, we have outlined 9 principles for safe AI use in GxP.


AI in GxP: What's working, what's risky, and what's next

Want to dive deeper into practical AI implementation frameworks? Learn more about AI use in GxP in our on-demand webinar, covering these topics with specific industry examples.

- Examples of AI use cases in GxP today

- The biggest risks and how to spot them

- Questions to ask before deploying AI

And much more